Following technical changes to its search engine, Google has updated its guidelines for webmasters. The main functional change this year is that the indexer now processes pages with included JavaScript and CSS much like a modern browser does. In other words, Google's crawlers use JavaScript and CSS files to render page content before indexing it. Consequently, developers should give search engines full access to these files and make sure both search engines and browsers can process them quickly. Besides faster indexing, this also improves the site's usability.

For optimal indexing, we advise that you stop blocking the images, JavaScript, and CSS files your site uses in robots.txt. When the search robot has full access to them, you reduce the risk that your pages are rendered incorrectly, which would otherwise leave the analysis working from incomplete information.
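One quick way to audit this is to test your robots.txt rules against the resource URLs your pages load. The sketch below uses Python's standard `urllib.robotparser` module. The robots.txt contents, the `example.com` URLs, and the `blocked_resources` helper are hypothetical and exist only for illustration. Note that the standard-library parser implements the original prefix-matching rules, not every extension Google supports (such as `*` and `$` wildcards), so treat the result as a first check rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical "old-style" robots.txt that blocks the asset folder.
OLD_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /assets/
"""

# Hypothetical fixed version: only the admin area stays blocked.
FIXED_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

# Hypothetical resources a page loads for rendering.
RESOURCES = [
    "https://example.com/assets/site.css",
    "https://example.com/assets/app.js",
    "https://example.com/images/logo.png",
]


def blocked_resources(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `agent` is NOT allowed to fetch under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for label, rules in (("old", OLD_ROBOTS_TXT), ("fixed", FIXED_ROBOTS_TXT)):
        blocked = blocked_resources(rules, RESOURCES)
        print(f"{label}: {len(blocked)} blocked resource(s)", blocked)
```

With the old rules, the style sheet and script under `/assets/` are reported as blocked; with the fixed rules, nothing the page needs for rendering is blocked.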

Originally, indexing followed the same rules as text-only browsers such as Lynx: the key factor was the presence and accessibility of text on a web page. Now that indexing works like a modern browser, several points deserve attention:

- Like modern browsers, search robots do not support every feature used on web pages. For the site's essential content and key technologies to be recognized, its design should follow the principles of progressive enhancement, so that the core content remains accessible even when some features are not supported. Keep in mind that not all features and structures are currently supported.

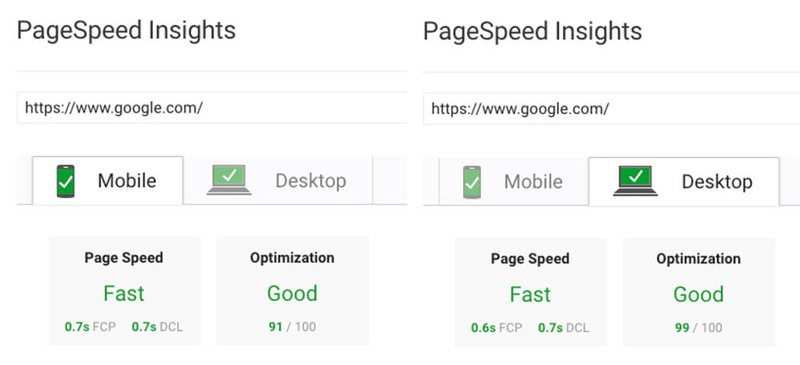

- Fast-loading pages make life easier not only for users but also for the search engine, which can then index the site's content more efficiently. Besides optimizing your style sheets (CSS) and content files, Google recommends eliminating unnecessary requests and downloads.

- Also make sure your server can handle the additional load of serving scripts and style sheets to search bots.
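To find unnecessary requests, it helps to list what a page actually asks the browser (and Googlebot) to download. The sketch below uses Python's standard `html.parser` to count the external scripts, style sheets, and images referenced in a page's HTML and to flag URLs requested more than once. The `ResourceAudit` class, the `audit` helper, and the sample page are hypothetical. It only sees references present in the static HTML, not resources added later by JavaScript or pulled in by CSS (`@import`, `url(...)`).

```python
from collections import Counter
from html.parser import HTMLParser


class ResourceAudit(HTMLParser):
    """Collects external scripts, style sheets, and images referenced in static HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.requests: list[tuple[str, str]] = []  # (kind, url)

    def handle_starttag(self, tag, attrs):
        # Valueless attributes such as `async` arrive as (name, None).
        a = {name: value or "" for name, value in attrs}
        if tag == "script" and a.get("src"):
            self.requests.append(("script", a["src"]))
        elif tag == "link" and "stylesheet" in a.get("rel", "").lower().split() and a.get("href"):
            self.requests.append(("stylesheet", a["href"]))
        elif tag == "img" and a.get("src"):
            self.requests.append(("image", a["src"]))


def audit(html: str) -> dict:
    """Summarize the external requests a page makes and any duplicated URLs."""
    parser = ResourceAudit()
    parser.feed(html)
    parser.close()
    by_kind = Counter(kind for kind, _ in parser.requests)
    per_url = Counter(url for _, url in parser.requests)
    return {
        "total": len(parser.requests),
        "by_kind": dict(by_kind),
        "duplicates": [url for url, n in per_url.items() if n > 1],
    }


# Hypothetical page that loads the same script twice.
SAMPLE_PAGE = """
<html><head>
<link rel="stylesheet" href="/css/reset.css">
<link rel="stylesheet" href="/css/main.css">
<script src="/js/jquery.js"></script>
<script async src="/js/jquery.js"></script>
</head><body><img src="/img/logo.png"><p>Hello</p></body></html>
"""

if __name__ == "__main__":
    print(audit(SAMPLE_PAGE))
```

On the sample page this reports five requests, with `/js/jquery.js` flagged as a duplicate. Removing duplicates and merging small style sheets or scripts into fewer files is a common way to cut the number of requests both users and crawlers must make.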

Google's webmaster panel offers an excellent way to see a site through the eyes of the search engine. Select the “Fetch as Google” tool and trace how individual pages are processed. Along the way you may notice some interesting details about how indexing works, and for web developers this is a great chance to improve their sites' performance. In 2021, page speed and user experience will play a much greater role in ranking: the faster a page loads and the more smoothly users can interact with its elements, the better it is likely to rank. Combining strong content with impeccable site performance is crucial for a top-notch user experience.
